ELLIS Talk: Implicit bias in machine learning

Date

Tuesday, October 31, 2023 14:00 - 15:00

Speaker

Ohad Shamir (Weizmann Institute)

Location

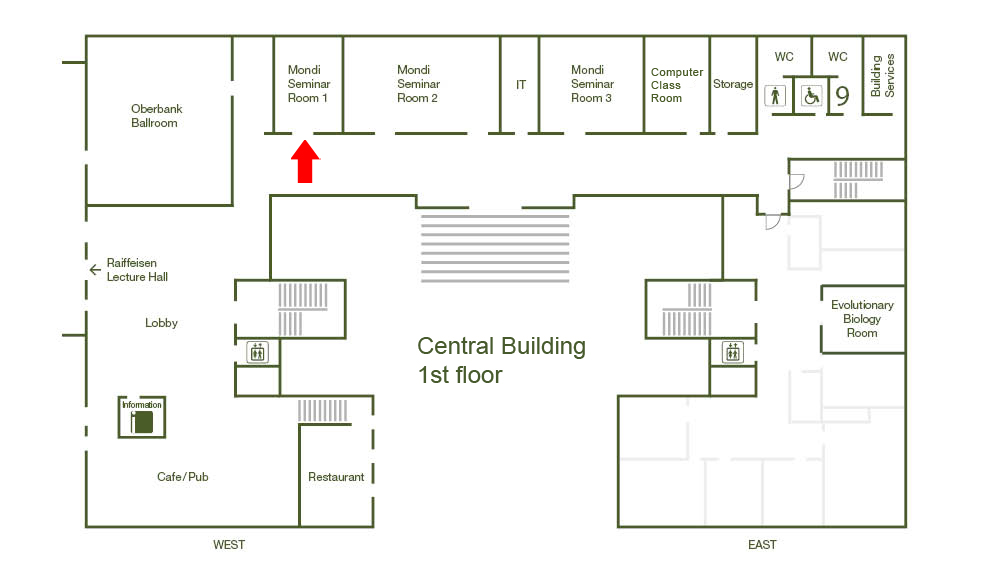

Central Bldg / O1 / Mondi 2a (I01.O1.008)

Series

Seminar/Talk

Tags

ELLIS talk

Host

Christoph Lampert

Contact

Most practical algorithms for supervised machine learning boil down to optimizing the average performance over a training dataset. However, it is increasingly recognized that although the optimization objective is the same, the manner in which it is optimized plays a decisive role in the properties of the resulting predictor. For example, when training large neural networks, there are generally many weight combinations that will perfectly fit the training data. However, gradient-based training methods somehow tend to reach those which, for example, do not overfit; are brittle to adversarially crafted examples; or have other interesting properties. In this talk, I'll describe several recent theoretical and empirical results related to this question.