Efficient and robust reversal learning

Date

Monday, January 29, 2024 11:30 - 12:30

Speaker

Angelika Steger (ETH)

Location

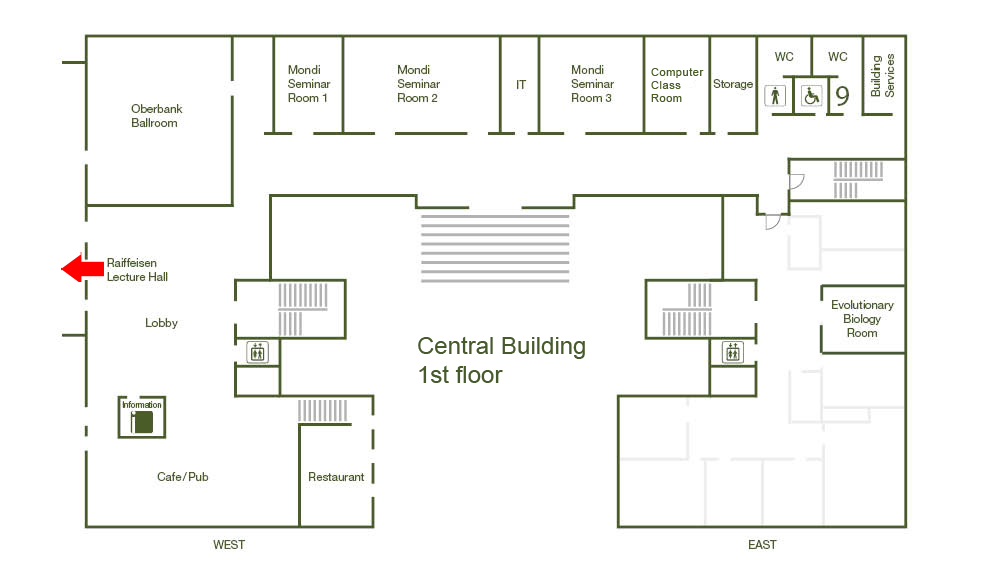

Raiffeisen Lecture Hall

Series

Colloquium

Tags

Institute Colloquium

Host

Uli Wagner

Contact

One of the defining characteristics of biological intelligence is its rapid adaptability to new environments, a trait explored in cognitive science through reversal learning tasks. In this talk we present a novel approach for the multi-armed bandit problem, the machine learning analogue of the reversal learning paradigm, that departs from the traditional method of estimating values. Instead, our method relies on continuously holding a belief about the best current option, coupled with a verification mechanism that periodically assesses this belief's validity. We demonstrate that our approach not only theoretically outperforms the best known algorithm but also explains well measured data across various experimental settings.