Learning to see in the Data Age

Date

Monday, October 2, 2023 11:30 - 12:30

Speaker

Alex Bronstein (Technion - Israel Institute of Technology)

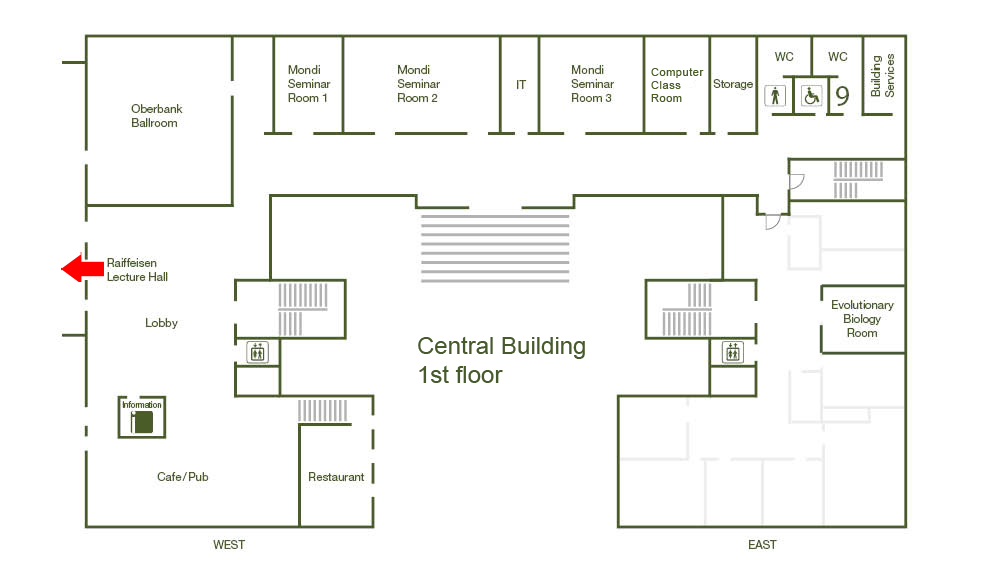

Location

Raiffeisen Lecture Hall

Series

Colloquium

Tags

Institute Colloquium

Host

Christoph Lampert

Recent spectacular advances in machine learning allow us to solve complex computer vision tasks, all the way to vision-based decision making. However, the input image itself is still produced by imaging systems built to deliver human-intelligible pictures, which are not necessarily optimal for the end task. In this talk, we will entertain the idea of including the camera hardware among the learnable degrees of freedom. I will show examples from optical, acoustic, magnetic resonance, and radar imaging demonstrating that simultaneously learning the "software" and the "hardware" parts of an imaging system benefits the end task.