The Approximation Power of Deep Neural Networks: Theory and Applications

Date

Thursday, June 13, 2019 16:00 - 18:00

Speaker

Gitta Kutyniok (TU Berlin)

Location

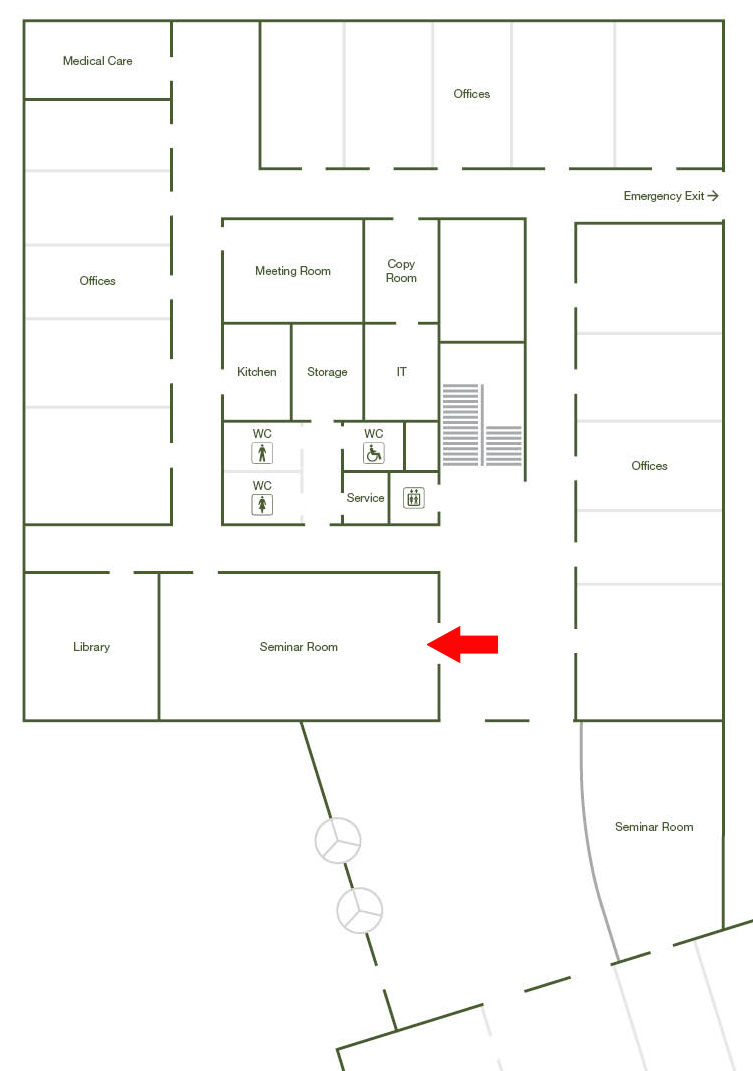

Big Seminar room Ground floor / Office Bldg West (I21.EG.101)

Series

Seminar/Talk

Tags

Mathematics and CS Seminar, mathematical_seminar_ics

Host

Laszlo Erdös

Contact

Despite the outstanding success of deep neural networks in real-world applications, most of the related research is empirically driven and a mathematical foundation is almost completely missing. The main goal of a neural network is to approximate a function, which for instance encodes a classification task. Thus, one theoretical approach to derive a fundamental understanding of deep neural networks focusses on their approximation abilities.

In this talk we will provide an introduction into this research area. After a general overview of mathematics of deep neural networks, we will discuss theoretical results which prove that not only do (memory-optimal) neural networks have as much approximation power as classical systems such as wavelets or shearlets, but they are also able to beat the curse of dimensionality. On the numerical side, we will then show that superior performance can typically be achieved by combining deep neural networks with classical approaches from approximation theory.